HDF5 in Igor Pro

HDF5 is a widely-used, very flexible data file format. The HDF5 file format is a creation of the National Center for Supercomputing Applications (NCSA). HDF5 is now supported by The HDF Group (http://www.hdfgroup.org).

Igor Pro includes deep support for HDF5, including:

-

Browsing HDF5 files

-

Loading data from HDF5 files

-

Saving data to HDF5 files

-

Saving Igor experiments as HDF5 files

-

Loading Igor experiments from HDF5 files

Prior to Igor Pro 9.00, HDF5 support was provided by an XOP that you had to activate. HDF5 support is now built into Igor and activation is no longer required.

Support for saving and loading Igor experiments as HDF5 files was added in Igor Pro 9.00. For details see HDF5 Packed Experiment Files.

Index of HDF5 Topics

Here are the main sections in the following material on HDF5:

HDF5 Guided Tour

This section will get you off on the right foot using HDF5 in Igor Pro.

In the following material you will see numbered steps that you should perform. Please perform them exactly as written so you stay in sync with the guided tour.

This tour in intended to help experienced Igor users learn how to access HDF5 files from Igor but also to entice non-Igor users to buy Igor. Therefore the tour is written assuming no knowledge of Igor.

HDF5 Overview

HDF5 is a very powerful but complex file format that is designed to be capable of storing almost any imaginable set of data and to encapsulate relationships between data sets.

An HDF5 file can contain within it a hierarchy similar to the hierarchy of directories and files on your hard disk. In HDF5, the hierarchy consists of "groups" and "datasets". There is a root group named "/". Each group can contain datasets and other groups.

An HDF5 dataset is a one-dimensional or multi-dimensional set of elements. Each element can be an "atomic" datatype (e.g., 16-bit signed integer or a 32-bit IEEE float) or a "composite" datatype such as a structure or an array. A "compound" datatype is a composite datatype similar to a C structure. Its members can be atomic datatypes or composite datatypes. For now, forget about composite datatypes - we will deal with atomic datatypes only.

Each dataset can have associated with it any number of "attributes". Attributes are like datasets but are attached to datasets, or to groups, rather than being part of the hierarchy.

Igor Pro HDF5 Support

The Igor Pro HDF5 package consists of built-in HDF5 support and a set of Igor procedures.

The Igor procedures, which are automatically loaded when Igor is launched, implement an HDF5 browser in Igor. The browser supports:

-

Previewing HDF5 file data

-

Loading HDF5 datasets and groups into Igor

-

Saving Igor waves and data folders in HDF5 files

In Igor Pro 9 and later, Igor can save Igor experiments as HDF5 packed experiment files and reload experiments from them.

Using the HDF5 Browser

-

Choose Data→Load Waves→New HDF5 Browser.

This displays an HDF5 browser control panel.

As you can see, the HDF5 Browser takes up a bit of screen space. You will need to arrange it and this help window so you can see both.

-

Click the Open HDF5 File button and open the following file:

Igor Pro Folder\Examples\Feature Demos\HDF5 Samples\TOVSB1NF.h5(If you're not sure where your Igor Pro Folder is, choose Misc→Path Status, click on the Igor symbolic path, and note the path to the Igor Pro Folder.)

We got this sample from the NCSA web site.

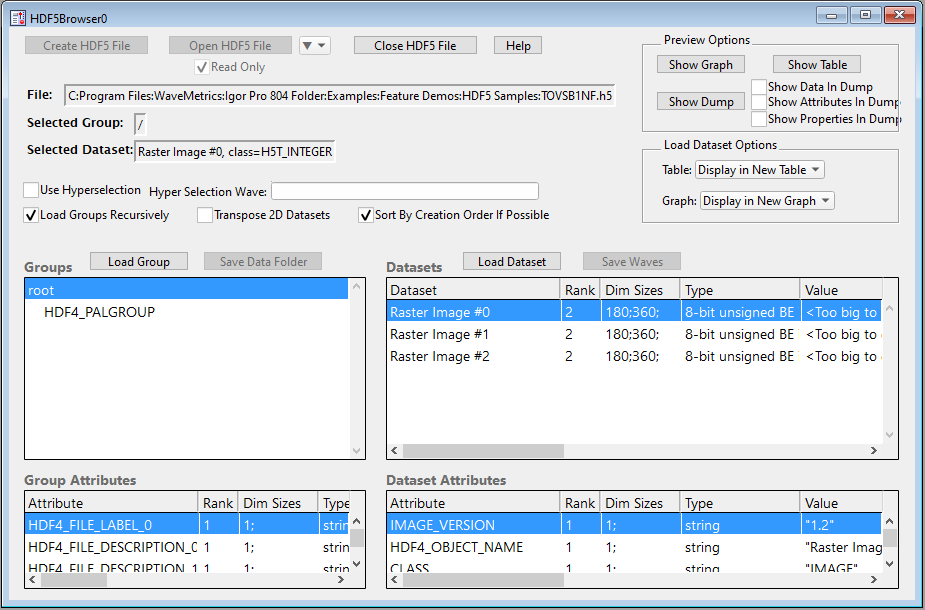

You should now see something like this:

The browser contains four lists.

The top/left list is the Groups list and shows the groups in the HDF5 file. Groups in an HDF5 file are analogous to directories in a hard disk hierarchy. In this case there are two groups, root (which is called "/" in HDF5 terminology) and HDF4_PALGROUP. HDF4_PALGROUP is a subgroup of root.

This file contains a number of objects with names that begin with HDF4 because it was created by converting an HDF4 file to HDF5 format using a utility supplied by The HDF Group.

Below the Groups list is the Group Attributes list. In the picture above, the root group is selected so the Group Attributes list shows the attributes of the root group. An attribute is like a dataset but is attached to a group or dataset instead of being part of the HDF5 file hierarchy. Attributes are usually used to save small snippets of information related to a group or dataset.

The top/right list is the Datasets list. This lists the datasets in the selected group, root in this case. In the root group of this file we have three datasets all of which are images.

Below the Datasets list is the Dataset Attributes list. It shows the attributes of the selected dataset, Raster Image #0 in this case.

Three of the lists have columns that show information about the items in the list.

-

Familiarize yourself with the information listed in the columns of the lists.

To see all the information you will need to either scroll the list and/or resize the entire HDF5 browser window to make it larger.

-

In the Groups list, click the subgroup and notice that the information displayed in the other lists changes.

-

In the Groups list, click the root group again.

Now we will see how to browse a dataset.

-

Click the Show Graph, Show Table and Show Dump buttons and arrange the three resulting windows so that they can all be seen at least partially.

These browser preview windows should typically be kept fairly small as they are intended just to provide a preview. It is usually convenient to position them to the right of the HDF5 browser.

The three windows are blank now. They display something only when you click on a dataset or attribute.

-

In the Datasets list, click the top dataset (Raster Image #0).

The dataset is displayed in each of the three preview windows.

The dump window shows the contents of the HDF5 file in "Data Description Language" (DDL). This is useful for experts who want to see the format details of a particular group, dataset or attribute. The dump window will be of no interest in most everyday use.

If you check the Show Data in Dump checkbox and then click a very large dataset, it will take a very long time to dump the data into the dump window. Therefore you should avoid checking the Show Data in Dump checkbox.

The preview graph and table, not surprisingly, allow you to preview the dataset in graphical and tabular form.

This dataset is a special case. It is an image formatted according to the HDF5 Image and Palette Specification which requires that the image have certain attributes that describe it. You can see these attributes in the Dataset Attributes list. They are named CLASS, IMAGE_VERSION, IMAGE_SUBCLASS and PALETTE. The HDF5 Browser uses the information in these attributes to make a nice preview graph.

An HDF5 file can contain a 2D dataset without the dataset being formatted according to the HDF5 Image and Palette Specification. In fact, most HDF5 files do not follow that specification. We use the term "formal image" to make clear that a particular dataset is formatted according to the HDF5 Image and Palette Specification and to distinguish it from other 2D datasets which may be considered to be images.

-

In the Dataset Attributes list, click the CLASS attribute.

The value of the selected attribute is displayed in the preview windows.

Try clicking the other image attributes, IMAGE_VERSION, IMAGE_SUBCLASS and PALETTE.

So far we have just previewed data, we have not loaded it into Igor. (Actually, it was loaded into Igor and stored in the root:Packages:HDF5Browser data folder, but that is an HDF5 Browser implementation detail.)

Now we will load the data into Igor for real.

-

Make sure that the Raster Image #0 dataset is selected, that the Table popup menu is set to Display In New Table and that the Graph popup menu is set to Display In New Graph. Then click the Load Dataset button.

The HDF5 Browser loads the dataset (and its associated palette, because this is a formal image with an associated palette dataset) into the current data folder in Igor and creates a new graph and a new table.

-

Choose Data→Data Browser and note the two "waves" in the root data folder.

"Wave" is short for "waveform" and is our term for a dataset. This terminology stems from our roots in time series signal processing.

The two waves, 'Raster Image #0' and 'Raster Image #0Pal' were loaded when you clicked the Load Dataset button. The graph was set up to display 'Raster Image #0' using 'Raster Image #0Pal' as a palette wave.

-

Back in the HDF5 Browser, with the root group still selected, click the Load Group button.

The HDF5 Browser created a new data folder named TOVSB1NF and loaded the contents of the HDF5 root group into the new data folder which can be seen in the Data Browser. The name TOVSB1NF comes from the name of the HDF5 file whose root group we just loaded.

When you load a group, the HDF5 Browser does not display the loaded data in a graph or table. That is done only when you click Load Dataset and also depends on the Load Dataset Options controls.

-

Click the Close HDF5 File button and then click the close box on the HDF5 Browser.

If you had closed the HDF5 browser without clicking the Close HDF5 File button, the browser would have closed the file anyway. It will also automatically close the file if you choose File→New Experiment or File→Open Experiment or if you quit Igor.

Next we will learn how to write an Igor procedure to access an HDF5 file programmatically. Before you start that, feel free to play with the HDF5 Browser on your own HDF5 files. Start by choosing Data→Load Waves→New HDF5 Browser.

Igor can handle most HDF5 files that you will encounter, but it cannot handle all possible HDF5 files. If you receive an error while examining your own files, it may be because of a bug or because Igor does not support a feature used in your file. In this case you can send the file along with a brief explanation of the problem to support@wavemetrics.com and we will determine whether the problem is a bug or just a limitation.

Loading HDF5 Data Programmatically

Igor includes a number of operations and functions for accessing HDF5 files. To get an idea of the range and scope of the operations, click the link below. Then return here to continue the guided tour.

In this section, we will load an HDF5 dataset using a user-defined function.

-

Choose File→New Experiment.

You will be asked if you want to save the current experiment. Click No (or Yes if you want to save your current work environment).

This closes the current experiment, killing any waves and windows.

-

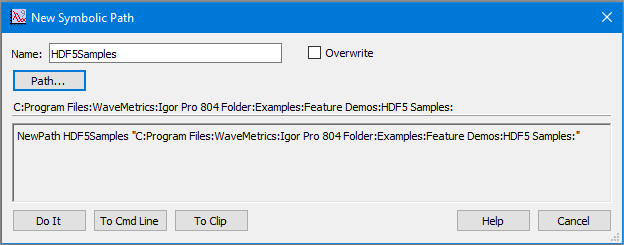

Choose Misc→New Path and create an Igor symbolic path named HDF5Samples and pointing to the HDF5 Samples directory which contains the TOVSB1NF.h5 file that we used in the preceding section.

The New Path dialog will look something like this:

Click Do It to create the symbolic path.

An Igor symbolic path is a short name that references a specific directory on disk. We will use this symbolic path in a command that opens an HDF5 file.

In HDF5, you must first open a file or create a new file. The HDF5 library returns a file ID that you use in subsequent calls to the library. When you are finished, you must close the file. The following example illustrates this three step process.

-

Choose Windows→Procedure Window and paste the following into the Procedure window:

Function TestLoadDataset(datasetName)

String datasetName // Name of dataset to be loaded

Variable fileID // HDF5 file ID will be stored here

Variable result = 0 // 0 means no error

// Open the HDF5 file

HDF5OpenFile /P=HDF5Samples /R /Z fileID as "TOVSB1NF.h5"

if (V_flag != 0)

Print "HDF5OpenFile failed"

return -1

endif

// Load the HDF5 dataset

HDF5LoadData /O /Z fileID, datasetName

if (V_flag != 0)

Print "HDF5LoadData failed"

result = -1

endif

// Close the HDF5 file

HDF5CloseFile fileID

return result

EndRead through the procedure. Notice that we use the symbolic path HDF5Samples created above as a parameter to the HDF5OpenFile operation.

The /R flag was used to open the file for read-only so that we don't get an error if the file cannot be opened for writing.

The /Z flag tells HDF5OpenFile that, if there is an error, it should set the variable V_flag to a non-zero value but return to Igor as if no error occurred. This allows us to handle the error gracefully rather than having Igor abort execution and display an error dialog.

HDF5OpenFile sets the local variable fileID to a value returned from the HDF5 library. We pass that value to the HDF5LoadData operation. We also provide the name of a dataset within that file to be loaded. Again we check the V_flag variable to see if an error occurred.

Finally we call HDF5CloseFile to close the file that we opened. It is important to always close files that we open. If, during development of a procedure, you forget to close a file or fail to close a file because of an error, you can close all open HDF5 files by executing:

HDF5CloseFile /A 0Also, Igor automatically closes all open files if you choose File→New Experiment, File→Revert Experiment or File→Open Experiment or if you quit Igor.

-

Click the Compile button at the bottom of the Procedure window.

If you don't see a Compile button at the bottom of the Procedure window, that is because the procedures were already automatically compiled when you deactivated the Procedure window.

If you get a compile error then you have not pasted the right text into the Procedure window or you have entered other incorrect text. Try going back to step 1 of this section.

Now we are ready to run our procedure.

-

Execute the following command in the Igor command line either by typing it and pressing Enter, by copy/paste followed by Enter, or by selecting it and pressing Ctrl-Enter:

TestLoadDataset("Raster Image #0")At this point the procedure should have correctly executed and you should see no error messages printed in the history area (just above the command line). This history area of the command window should contain:

Waves loaded by HDF5LoadData: Raster Image #0

Now we will verify that the data was loaded.

-

Choose Data→Data Browser. Then double-click the wave named 'Raster Image #0'.

This creates a table showing the data just loaded.

At this point Igor's root data folder contains just one wave, 'Raster Image #0', and does not contain the corresponding palette wave. That's because we loaded the dataset as a plain dataset, not as a formal image. The HDF5LoadData operation does not know about formal images. For that we need to use HDF5LoadImage.

-

In the Data Browser, right-click the 'Raster Image #0' wave and choose New Image.

Igor creates an image plot of the wave.

Because we loaded 'Raster Image #0' as a plain dataset, not as a formal image, the image plot is gray instead of colored. Also, it is rotated 90 degrees relative to the image plot we saw earlier in the tour. That's because Igor plots 2D data different from most programs and the HDF5LoadData operation does not compensate by default.

Our procedure is of rather limited value because it is hard-coded to use a specific symbolic path and a specific file name. We will now make it more general.

Before we do that, we will save the current work environment so that, if you make a mistake, you can revert to a version of it that worked.

-

Choose File→Save Experiment As and save the current work environment in a new Igor experiment file named "HDF5 Tour.pxp" in a directory where you store your own files.

This saves all of your data and procedures in a single file. If need be, later you can revert to the saved state by choosing File→Revert Experiment.

-

Open the Procedure window (Windows→Procedure Window) and replace the first two lines of the TestLoadDataset function with this:

Function TestLoadDataset(pathName, fileName, datasetName)

String pathName // Name of symbolic path

String fileName // Name of HDF5 file

String datasetName // Name of dataset to be loaded -

Change the HDF5OpenFile command to this:

HDF5OpenFile /P=$pathName /R /Z fileID as fileNameHere we replaced HDF5Samples with $pathName. $pathName tells Igor to use the contents of the string parameter pathName as the parameter for the /P flag. We also replaced "TOVSB1NF.h5" with fileName so that we can specify the file to be loaded when we call the function instead of when we code it.

-

Click the Compile button and then execute this in the command line:

TestLoadDataset("HDF5Samples", "TOVSB1NF.h5", "Raster Image #0")This does the same thing as the earlier version of the function but now we have a more general function that can be used on any file in any directory.

The command above reloaded the 'Raster Image #0' dataset into Igor, overwriting the previous contents. Since the new contents is identical to the previous contents, the graph and table did not change.

-

Choose File→Save Experiment to save your current work environment in the HDF5 Tour.pxp experiment file that you created earlier.

You can now take a break and quit Igor if you want.

Saving HDF5 Data Programmatically

Now that we have seen how to programmatically load HDF5 data we will turn our attention to saving Igor data in an HDF5 file.

In this section, we will create some Igor data and save it in an HDF5 dataset from a user-defined function.

-

If the HDF5 Tour.pxp experiment that you previously saved is not already open, open it by choosing File→Recent Experiments→HDF5 Tour.pxp.

Next we will create some data that we can save in an HDF5 file. We will create the data in a new Igor data folder to keep it separate from our other data.

-

Choose Data→Data Browser. Click the New Data Folder button. Enter the name Test Data, click the Set As Current Data Folder and click OK.

The Data Browser shows the new data folder and a red arrow pointing to it. The red arrow indicates the current data folder. Operations that do not explicitly address a specific data folder work in the current data folder.

-

On Igor's command line, execute these commands:

Make/N=100 data0, data1, data2

SetScale x 0, 2*PI, data0, data1, data2

data0=sin(x); data1=2*sin(x+PI/6); data2=3*sin(x+PI/4)

Display data0, data1, data2The SetScale operation set the X scaling for each wave to run from 0 to 2π. The symbol x in the wave assignment statements takes on the X value for each point in the destination wave as the assignment is executed.

(If you have not already done it, later you should do the Igor guided tour by choosing Help→Getting Started. It explains X scaling and wave assignment statements as well as many other Igor features.)

-

Choose Misc→New Path and create an Igor symbolic path named HDF5Data pointing to a directory on your hard disk where you will save data.

Choose Misc→Path Status and verify that the HDF5Data symbolic path exists and points to the intended directory.

Now that we have done some more work worth saving we will save the current experiment so we can revert to a known good state if necessary.

-

Choose File→Save Experiment to save your current work environment in the HDF5 Tour.pxp experiment file that you created earlier.

If need be, later you can revert to the saved state by choosing File→Revert Experiment.

-

Choose Windows→Procedure Window and paste the following into the Procedure window below the TestLoadDataset function:

Function TestSaveDataset(pathName, fileName, w)

String pathName // Name of symbolic path

String fileName // Name of HDF5 file

Wave w // The wave to be saved

Variable result = 0 // 0 means no error

Variable fileID

// Create a new HDF5 file, overwriting if same-named file exists

HDF5CreateFile/P=$pathName /O /Z fileID as fileName

if (V_flag != 0)

Print "HDF5CreateFile failed"

return -1

endif

// Save wave as dataset

HDF5SaveData /O /Z w, fileID

if (V_flag != 0)

Print "HDF5SaveData failed"

result = -1

endif

// Close the HDF5 file

HDF5CloseFile fileID

return result

EndRead through the procedure. It should look familiar as it is similar to the TestLoadDataset function we wrote before. Again it has parameters that specify a symbolic path and file name. It does not have a parameter to specify the dataset name because HDF5SaveData uses the wave name as the dataset name unless instructed otherwise. This function has a wave parameter through which we will specify the wave whose data is to be written to the HDF5 file.

Here we use HDF5CreateFile to create a new file rather than HDF5OpenFile. HDF5CreateFile creates a new file and opens it, returning a file ID. If you wanted to add a dataset to an existing file you would use HDF5OpenFile instead of HDF5CreateFile.

HDF5CreateFile sets the local variable fileID to a value returned from the HDF5 library. We pass that value to the HDF5SaveData operation. The /O flag means that, if there is already a dataset with the same name, it will be overwritten.

Finally we call HDF5CloseFile to close the file that we opened via HDF5CreateFile.

-

Click the Compile button at the bottom of the Procedure window.

If you get an error then you have not pasted the right text into the Procedure window or you have entered other incorrect text. If you cannot find the error, choose File→Revert Experiment and go back to step 6 of this section.

Now we are ready to run our procedure.

-

Execute the following command in the Igor command line either by typing it and pressing Enter, by copy/paste followed by Enter, or by selecting it and pressing Ctrl-Enter:

TestSaveDataset("HDF5Data", "SaveTest.h5", data0)At this point the procedure should have correctly executed and you should see no error messages printed in the history area of the command window (just above the command line).

Now we will verify that the data was saved.

-

Choose Data→Load Waves→New HDF5 Browser. Click the Open HDF5 File button and open the SaveTest.h5 file that we just created.

Verify that the file contains a dataset named data0.

The data0 dataset has some attributes. These attributes allow HDF5LoadData to fully recreate the wave and all of its properties if you ever load it back into Igor. If you don't want to save these attributes you can use the /IGOR=0 flag when calling HDF5SaveData.

-

Click the Close HDF5 File button.

We can't write more data to the file while it is open in the HDF5 Browser.

The TestSaveDataset function is rather limited because it saves just one wave. We will now make it more general so that it can save any number of waves. But first we will save our work since it is in a known good state.

-

Choose File→Save Experiment to save your current work environment in the HDF5 Tour.pxp experiment file that you created earlier.

If need be, later you can revert to the saved state by choosing File→Revert Experiment.

Next we will create a new, more general user function with a different name (TestSaveDatasets instead of TestSaveDataset).

-

Choose Windows→Procedure Window and paste the following into the Procedure window below the TestSaveDataset function:

Function TestSaveDatasets(pathName, fileName, listOfWaves)

String pathName // Name of symbolic path

String fileName // Name of HDF5 file

String listOfWaves // Semicolon-separated list of waves

Variable result = 0 // 0 means no error

Variable fileID

// Create a new HDF5 file, overwriting if same-named file exists

HDF5CreateFile/P=$pathName /O /Z fileID as fileName

if (V_flag != 0)

Print "HDF5CreateFile failed"

return -1

endif

String listItem

Variable index = 0

do

listItem = StringFromList(index, listOfWaves)

if (strlen(listItem) == 0)

break // No more waves

endif

// Create a local reference to the wave

Wave w = $listItem

// Save wave as dataset

HDF5SaveData /O /Z w, fileID

if (V_flag != 0)

Print "HDF5SaveData failed"

result = -1

break

endif

index += 1

while(1)

// Close the HDF5 file

HDF5CloseFile fileID

return result

EndRead through the procedure. It is similar to the TestSaveDataset function.

The first difference is that, instead of passing a wave, we pass a list of wave names in a string parameter. This parameter is a semicolon-separated list which is a commonly-used programming technique in Igor.

The next difference is that we have a do-while loop which extracts a name from the list and saves the corresponding wave as a dataset in the HDF5 file.

The statement

Wave w = $listItemcreates a local "wave reference" and allows us to use w to refer to a wave whose identity is determined by the contents of the listItem string variable. This also is a common Igor programming technique and is explained in detail in the Programming help file.

-

Click the Compile button at the bottom of the Procedure window.

If you get an error then you have not pasted the right text into the Procedure window or you have entered other incorrect text. If you cannot find the error, choose File→Revert Experiment and go back to step 12 of this section.

Now we are ready to run our procedure.

-

Enter the following command in the Igor command line either by typing it and pressing enter, by copy/paste followed by Enter, or by selecting it and pressing Ctrl-Enter:

TestSaveDatasets("HDF5Data", "SaveTest.h5", "data0;data1;data2;")The third parameter is a string in this function. In the previous example, it was a name, specifically the name of a wave. Strings are entered in double-quotes but names are not.

At this point the procedure should have correctly executed and you should see no error messages printed in the history area of the command window (just above the command line).

Now we will verify that the data was saved.

-

Activate the HDF5 Browser window. Click the Open HDF5 File button and open the SaveTest.h5 file that we just created.

Verify that the file contains datasets named data0, data1 and data2.

-

Click the Close HDF5 File button.

-

Choose File→Save Experiment and save the current work environment in the HDF5 Tour.pxp experiment file.

This concludes the HDF5 Guided Tour.

Where To Go From Here

If you are new to Igor or have never done it you should definitely do the Igor Guided Tour. If you are in a hurry, do just the first half of it.

You should also read the following help files which explain the basics of Igor in more detail:

Getting Help, Experiments, Files and Folders, Windows, Waves

To get started with Igor programming you need to read these help sections:

Working With Commands, Programming Overview, User-Defined Functions

For HDF5-specific programming, you need to have at least a basic understanding of HDF5. See the links in the next section. Then you need to familiarize yourself with the HDF5-related operations and functions listed in HDF5 Operations and Functions.

If you run into problems, send a sample HDF5 file along with a description of what you are trying to do to support@wavemetrics.com and we will try to get you started in the right direction.

Learning More About HDF5

In order to use HDF5 operations, you must have at least a basic understanding of HDF5. The HDF5 web site provides an abundance of material. To get started, visit this web page:

https://support.hdfgroup.org/documentation/hdf5/latest/_learn_basics.html

The HDF Group provides a Java-based program called HDFView. You may want to download and install HDFView so that you can easily browse HDF5 files as you read the introductory material. Or you may prefer to use the HDF5 browser provided by Igor.

The HDF5 Browser

Igor Pro includes an automatically-loaded procedure file, "HDF5 Browser.ipf", which implements an HDF5 browser. The browser lets you interactively examine HDF5 files to get an idea of what is in them. It also lets you load HDF5 datasets and groups into Igor and save Igor waves and data folders in HDF5 files.

The browser currently does not support creating attributes. For that you must use the HDF5SaveData operation.

The HDF5 browser includes lists which display:

- All groups in the file

- All attributes of the selected group

- All datasets in the selected group

- All attributes of the selected dataset

In addition, the HDF5 browser optionally displays:

- A graph displaying the selected dataset or attribute

- A table displaying the selected dataset or attribute

- A notebook window containing a dump of the selected group, dataset or attribute

Using The HDF5 Browser

To browse HDF5 files, choose Data→Load Waves→New HDF5 Browser. This creates an HDF5 browser control panel. You can create additional browsers by choosing the same menu item again.

Each browser control panel lets you browse one HDF5 file at a time. For most users, one browser will be sufficient.

After creating a new browser, click the Open HDF5 File button to choose the file to browse.

The HDF5 browser contains four lists which display the groups, group attributes, datasets and dataset attributes in the file being browsed.

HDF5 Browser Basic Controls

Here is a description of the basic controls in the HDF5 browser that most users will use.

Create HDF5 File

Creates a new HDF5 file and opens it for read/write.

Open HDF5 File

Opens an existing HDF5 file for read-only or read/write, depending on the state of the Read Only checkbox.

Close HDF5 File

Closes the HDF5 file.

Show Graph

If you click the Show Graph button, the browser displays a preview graph of subsequent datasets or attributes that you select.

Show Table

If you click the Show Table button, the browser displays a preview table of subsequent datasets or attributes that you select.

Load Dataset

The Load Dataset button loads the currently selected dataset into the current data folder.

Load Dataset Options

This section of the browser contains two popup menus that determine if data that you load by clicking the Load Dataset button is displayed in a table or graph.

The Table popup menu contains three items: No Table, Display in New Table, and Append To Top Table. If you choose Append To Top Table and there are no tables, it acts as if you chose Display in New Table.

The Graph popup menu contains three items: No Graph, Display in New Graph, and Append To Top Graph. If you choose Append To Top Graph and there are no graphs, it acts as if you chose Display in New Graph. Appending is useful when you are loading 1D data but of little use when appending multi-dimensional data. Multi-dimensional data is appended as an image plot which obscures anything that was already in the graph.

Text waves are not displayed in graphs even if Display in New Graph or Append To Top Graph is selected.

Save Waves

Displays a panel that allows you to select and save waves in the HDF5 file, provided the file was open for read/write.

Transpose 2D

If the Transpose 2D checkbox is checked, 2D datasets are transposed to compensate for the difference in how Igor and other programs treat rows and columns in an image plot. See HDF5 Images Versus Igor Images for details. This does not affect the loading of "formal" images (images formatted according to the HDF5 Image and Palette Specification).

Sort By Creation Order If Possible

If checked, and if the file supports listing by creation order, the HDF5 Browser displays and loads groups and datasets in creation order.

Most HDF5 files do not include creation-order information and so are listed and loaded in alphabetical order even if this checkbox is checked. However, HDF5 files written by Igor Pro 9 or later include creation-order information and so can be listed and loaded in creation order.

Load Group

The Load Group button loads all of the datasets in the currently selected group into a new data folder inside current data folder.

The Load Group button calls the HDF5LoadGroup operation using the /IMAG flag. This means that, if the group contains a formal image (see HDF5 Images Versus Igor Images), it is be loaded as a formal image.

The Hyperselection controls do not apply to the operation of the Load Group button.

Load Groups Recursively

If the Load Groups Recursively checkbox is checked, when the Load Group button is pressed any subgroups in the currently selected group are loaded as sub-datafolders.

Load Group Only Once

Because an HDF5 file is a "directed graph" rather than a strict hierarchy, a given group in an HDF5 file can appear in more than one location in the file's hierarchy.

If the Load Group Only Once checkbox is checked, the Load Group button loads a given group only the first time it is encountered. If it is unchecked, the Load Group button loads a given group each time it appears in the file's hierarchy resulting in duplicated data. If in doubt, leave Load Group Only Once checked.

Save Data Folder

Displays a panel that allows you to select a single data folder and save it in the HDF5 file, provided the file was open for read/write.

HDF5 Browser Contextual Menus

If you right-click a row in the Datasets, Dataset Attributes or Group Attributes lists, the browser displays a contextual menu that allows you to perform the following actions:

-

Copy the selected dataset or attribute's value to the clipboard as text

-

Load the selected dataset or attribute as a wave

-

Load all datasets or attributes as a waves

These popup menus also appear if you click the ▼ icons above the lists. They work the same as the HDF5 Browser contextual menus described above.

When the Datasets list is active, choosing Load Selected Dataset as Wave does the same thing as clicking the Load Dataset button.

When copying data to the clipboard, the data is copied as text and consequently may not represent the full precision of the underlying dataset or attribute. Not all datatypes are supported. If the browser supports the datatype that you right clicked, the contextual menu shows "Copy to Clipboard as Text". If the datatype is not supported, it shows "Can't Copy This Data Type".

When loading as waves, any pre-existing waves with the same names are overridden.

When loading waves from datasets, the table and graph options in the Load Dataset Options section of the browser apply. If you check Apply to Attributes also, the options apply when loading waves from attributes.

HDF5 Browser Advanced Controls

Here is a description of the advanced controls in the HDF5 browser. These are for use by people familiar with the HDF5 file format.

Show Dump

If you click the Show Dump button, the browser displays a notebook in which you can see additional details about subsequent groups, datasets or attributes that you select. The dump window is updated each time you select a group, dataset or attribute from any of the lists.

Show Data In Dump

When unchecked, the dump shows header information but not the actual data of a dataset. When checked it shows data as well as header information for a dataset.

If you check the Show Data In Dump checkbox and choose to dump a very large dataset, the dump could take a very long time. If the dump seems to be taking forever, clicking the Abort button in the Igor status bar.

Even if the Show Data In Dump checkbox is checked, the dump for a group consist of the header information only and omits the actual data for datasets and attributes.

Show Attributes In Dump

The Show Attributes In Dump checkbox lets you determine whether attributes are dumped when you select a group or dataset. When checked, information about any attributes associated with the dataset is included in the dump. This checkbox does not affect what is dumped when you select an item in the group or dataset attribute lists.

Show Properties In Dump

The Show Properties In Dump checkbox lets you see properties such as storage layout and filters (compression). This information is usually of little interest but is useful when investigating the effects of compression.

Use Hyperselection

If you check the Use Hyperselection checkbox and enter a path to a "hyperslab wave", the HDF5 Browser uses the hyperselection in the wave to load a subset of subsequent datasets or attributes that you click. This is a feature for advanced users who understand HDF5 hyperselections and have read the HDF5 Dataset Subsets discussion below.

The hyperselection is used when you click the Load Dataset button but not when you click the Load Group button.

HDF5 Browser Dump Technical Details

The dump notebook displays a dump of the selected group, dataset or attribute in "Data Description Language" (DDL) format. For most purposes you will not need the dump window. It is useful for experts who are trying to debug a problem or for people who are trying to understand the nuts and bolts of HDF5.

Sometimes strings in HDF5 files contain a large number of trailing nulls. These are not displayed in the dump window.

Sometimes strings in HDF5 files contain the literal strings "\r", "\n" and "\t" to represent carriage return, linefeed and tab. To improve readability, in the dump window these literal strings are displayed as actual carriage returns, linefeeds and tabs.

HDF5 Operations and Functions

This section lists Igor's HDF5-related operations and functions:

| HDF5CreateFile | Creates a new HDF5 file or overwrites an existing file. | |

| HDF5OpenFile | Opens an HDF5 file, returning a file ID that is passed to other operations and functions. | |

| HDF5CloseFile | Closes an HDF5 file or all open HDF5 files. | |

| HDF5FlushFile | Flushes an HDF5 file or all open HDF5 files. | |

| HDF5CreateGroup | Creates a group in an HDF5 file, returning a group ID that can be passed to other operations and functions. | |

| HDF5OpenGroup | Opens an existing HDF5 group, returning a group ID that can be passed to other operations and functions. | |

| HDF5ListGroup | Lists all objects in a group. | |

| HDF5CloseGroup | Closes an HDF5 group. | |

| HDF5LinkInfo | Returns information about an HDF5 link. | |

| HDF5ListAttributes | Lists all attributes associated with a group, dataset or datatype. | |

| HDF5AttributeInfo | Returns information about an HDF5 attribute. | |

| HDF5DatasetInfo | Returns information about an HDF5 dataset. | |

| HDF5LoadData | Loads data from an HDF5 dataset or attribute into Igor. | |

| HDF5LoadImage | Loads an image written according to the HDF5 Image and Palette Specification version 1.2. | |

| HDF5LoadGroup | Loads an HDF5 group and its datasets into an Igor Pro data folder. | |

| HDF5SaveData | Saves Igor waves in an HDF5 file. | |

| HDF5SaveImage | Saves an image in the format specified by the HDF5 Image and Palette Specification version 1.2. | |

| HDF5SaveGroup | Saves an Igor data folder in an HDF5 group. | |

| HDF5TypeInfo | Returns information about an HDF5 datatype. | |

| HDF5CreateLink | Creates a new hard, soft or external link. | |

| HDF5UnlinkObject | Unlinks an object (a group, dataset, datatype or link) from an HDF5 file. This deletes the object from the file's hierarchy but does not free up the space in the file used by the object. | |

| HDF5DimensionScale | Supports the creation and querying of HDF5 dimension scales. | |

| HDF5LibraryInfo | Returns information about the HDF5 library used by Igor. This is of interest to advanced programmers only. | |

| HDF5Control | Provides control of aspects of Igor's use of the HDF5 file format. | |

| HDF5Dump | Returns a DDL-format dump of a group, dataset or attribute. | |

| HDF5DumpErrors | Returns information about HDF5-related errors encountered by Igor. This is a diagnostic tool for experts that is needed only in rare cases. | |

HDF5 Procedure Files

Igor ships with two procedure files to support HDF5 use and programming. Both files are automatically loaded by Igor on launch and consequently are always available.

"HDF5 Browser.ipf" implements the HDF5 Browser. This procedure file is an independent module and consequently is normally hidden. If you are an Igor programmer who wants to inspect the procedure file, see Independent Modules for background information. However, there is no reason for you to call routines in "HDF5 Browser.ipf" from your own code.

"HDF5 Utilities.ipf" is a public procedure file (i.e., not an independent module) that defines HDF5-related constants and provides HDF5-related utility routines that may be of use if you write procedures that use HDF5 features.

If you write your own procedure file, you can use the constants and utility routines in "HDF5 Utilities.ipf" without #including anything. However, if you are creating your own independent module for HDF5 programming, you will need to #include "HDF5 Utilities.ipf" into your independent module - see "HDF5 Browser.ipf" for an example.

HDF5 Attributes

An attribute is a piece of data attached to an HDF5 group, dataset or named datatype.

To load an attribute, you need to use the HDF5LoadData operation with the /A=attributeNameStr flag and the /TYPE=objectType flag.

Loading attributes of type H5T_COMPOUND (compound - i.e., structure) is not supported.

Loading an HDF5 Numeric Attribute

This function illustrates how to load a numeric attribute of a group or dataset. The function result is an error code. The value of the attribute is returned via the pass-by-reference attributeValue numeric parameter.

Function LoadHDF5NumericAttribute(pathName, filePath, groupPath, objectName, objectType, attributeName, attributeValue)

String pathName // Symbolic path name - can be "" if filePath is a full path

String filePath // file name or partial path relative to symbolic path, or full path to file

String groupPath // Path to group, such as "/", "/my_group"

String objectName // Name of group or dataset

Variable objectType // 1=group, 2=dataset

String attributeName // Name of attribute

Variable& attributeValue // Output - pass-by-reference parameter

attributeValue = NaN

Variable result = 0

// Open the HDF5 file

Variable fileID // HDF5 file ID will be stored here

HDF5OpenFile /P=$pathName /R /Z fileID as filePath

if (V_flag != 0)

Print "HDF5OpenFile failed"

return -1

endif

Variable groupID // HDF5 group ID will be stored here

HDF5OpenGroup /Z fileID, groupPath, groupID

if (V_flag != 0)

Print "HDF5OpenGroup failed"

HDF5CloseFile fileID

return -1

endif

HDF5LoadData /O /A=attributeName /TYPE=(objectType) /N=tempAttributeWave /Q /Z groupID, objectName

result = V_flag // 0 if OK or non-zero error code

if (result == 0)

Wave tempAttributeWave

if (WaveType(tempAttributeWave) == 0)

attributeValue = NaN // Attribute is string, not numeric

result = -1

else

attributeValue = tempAttributeWave[0]

endif

KillWaves/Z tempAttributeWave

endif

// Close the HDF5 group

HDF5CloseGroup groupID

// Close the HDF5 file

HDF5CloseFile fileID

return result

End

Loading an HDF5 String Attribute

This function illustrates how to load a string attribute of a group or dataset. The function result is an error code. The value of the attribute is returned via the pass-by-reference attributeValue string parameter.

Function LoadHDF5StringAttribute(pathName, filePath, groupPath, objectName, objectType, attributeName, attributeValue)

String pathName // Symbolic path name - can be "" if filePath is a full path

String filePath // file name or partial path relative to symbolic path, or full path to file

String groupPath // Path to group, such as "/", "/metadata_group"

String objectName // Name of group or dataset

Variable objectType // 1=group, 2=dataset

String attributeName // Name of attribute

String& attributeValue // Output - pass-by-reference parameter

attributeValue = ""

Variable result = 0

// Open the HDF5 file

Variable fileID // HDF5 file ID will be stored here

HDF5OpenFile /P=$pathName /R /Z fileID as filePath

if (V_flag != 0)

Print "HDF5OpenFile failed"

return -1

endif

Variable groupID // HDF5 group ID will be stored here

HDF5OpenGroup /Z fileID, groupPath, groupID

if (V_flag != 0)

Print "HDF5OpenGroup failed"

HDF5CloseFile fileID

return -1

endif

HDF5LoadData /O /A=attributeName /TYPE=(objectType) /N=tempAttributeWave /Q /Z groupID, objectName

result = V_flag // 0 if OK or non-zero error code

if (result == 0)

Wave/T tempAttributeWave

if (WaveType(tempAttributeWave) != 0)

attributeValue = "" // Attribute is numeric, not string

result = -1

else

attributeValue = tempAttributeWave[0]

endif

KillWaves/Z tempAttributeWave

endif

// Close the HDF5 group

HDF5CloseGroup groupID

// Close the HDF5 file

HDF5CloseFile fileID

return result

End

Loading All Attributes of an HDF5 Group or Dataset

This function illustrates loading all of the attributes of a given group or dataset. The attributes are loaded into waves in the current data folder.

Function LoadHDF5Attributes(pathName, filePath, groupPath, objectName, objectType, verbose)

String pathName // Symbolic path name - can be "" if filePath is a full path

String filePath // file name or partial path relative to symbolic path, or full path to file

String groupPath // Path to group, such as "/", "/metadata_group"

String objectName // Name of object whose attributes you want or "." for the group specified by groupPath

Variable objectType // The type of object referenced by objectPath: 1=group, 2=dataset

Variable verbose // Bit 0: Print errors; Bit 1: Print warnings; Bit 2: Print routine info

Variable printErrors = verbose & 1

Variable printWarnings = verbose & 2

Variable printRoutine = verbose & 4

Variable result = 0 // 0 means no error

// Open the HDF5 file

Variable fileID // HDF5 file ID will be stored here

HDF5OpenFile /P=$pathName /R /Z fileID as filePath

if (V_flag != 0)

if (printErrors)

Print "HDF5OpenFile failed"

endif

return -1

endif

Variable groupID // HDF5 group ID will be stored here

HDF5OpenGroup /Z fileID, groupPath, groupID

if (V_flag != 0)

if (printErrors)

Print "HDF5OpenGroup failed"

endif

HDF5CloseFile fileID

return -1

endif

HDF5ListAttributes /TYPE=(objectType) groupID, objectName

if (V_Flag != 0)

if (printErrors)

Print "HDF5ListAttributes failed"

endif

HDF5CloseGroup groupID

HDF5CloseFile fileID

return -1

endif

Variable numAttributes = ItemsInList(S_HDF5ListAttributes)

Variable i

for(i=0; i<numAttributes; i+=1)

String attributeNameStr = StringFromList(i, S_HDF5ListAttributes)

STRUCT HDF5DataInfo di

InitHDF5DataInfo(di) // Initialize structure

HDF5AttributeInfo(groupID, objectName, objectType, attributeNameStr, 0, di)

Variable doLoad = 0

switch(di.datatype_class)

case H5T_INTEGER:

case H5T_FLOAT:

case H5T_TIME: // Not yet tested

case H5T_STRING:

case H5T_BITFIELD: // Not yet tested

case H5T_OPAQUE: // Not yet tested

case H5T_REFERENCE:

case H5T_ENUM: // Not yet tested

case H5T_ARRAY: // Not yet tested

doLoad = 1

break

case H5T_COMPOUND: // HDF5LoadData cannot load a compound attribute

doLoad = 0

break

endswitch

if (!doLoad)

if (printWarnings)

Printf "Not loading attribute %s - class %s not supported\r", attributeNameStr, di.datatype_class_str

endif

continue

endif

HDF5LoadData /O /A=attributeNameStr /TYPE=(objectType) /Q /Z groupID, objectName

if (V_flag != 0)

if (printErrors)

Print "HDF5LoadData failed"

endif

result = -1

break

endif

if (printRoutine)

Printf "Loaded attribute %d, name=%s\r", i, attributeNameStr

endif

endfor

// Close the HDF5 group

HDF5CloseGroup groupID

// Close the HDF5 file

HDF5CloseFile fileID

return result

End

HDF5 Dataset Subsets

It is possible, although usually not necessary, to load a subset of an HDF5 dataset using a "hyperslab". To use this feature, you must have a good understanding of hyperslabs which are explained in the HDF5 documentation. The examples below assume that you have read and understood that documentation.

HDF5 does not support loading a subset of an attribute.

To load a subset of a dataset, use the /SLAB flag of the HDF5LoadData operation. The /SLAB flag takes as its parameter a "slab wave". This is a two-dimensional wave containing exactly four columns and at least as many rows as there are dimensions in the dataset you are loading.

The four columns of the slab wave correspond to the start, stride, count and block parameters to the HDF5 H5Sselect_hyperslab routine from the HDF5 library.

The following examples illustrate how to use a hyperslab. The examples use a sample file provided by NCSA and stored in Igor's HDF5 Samples directory.

Create an Igor symbolic path (Misc→New Symbolic Path) named HDF5Samples which points to the folder containing the i32le.h5 file (Igor Pro X Folder:Examples:Feature Demos:HDF5 Samples).

In the first example, we load a 2D dataset named "TestArray" which has 6 rows and 5 columns. We start by loading the entire dataset without using a hyperslab. You can execute the following commands by selecting them and pressing Ctrl-Enter.

Variable fileID

HDF5OpenFile/P=HDF5Samples /R fileID as "i32le.h5"

HDF5LoadData /N=TestWave fileID, "TestArray"

Edit TestWave

Now we create a slab wave and set its dimension labels to make it easier to remember which column holds which type of information. We will use a utility routine in the automatically-loaded "HDF5 Utiliies.ipf" procedure file which is automatically loaded when Igor is launched:

HDF5MakeHyperslabWave("root:slab", 2) // In HDF5 Utilities.ipf

Edit root:slab.ld

Now we set the values of the slab wave to give the same result as before, that is, to load the entire dataset, and then we load the data again using the slab.

slab[][%Start] = 0 // Start at zero for all dimensions

slab[][%Stride] = 1 // Use a stride of 1 for all dimensions

slab[][%Count] = 1 // Use a count of 1 for all dimensions

slab[0][%Block] = 6 // Set block size for the dimension 0 to 6

slab[1][%Block] = 5 // Set block size for the dimension 1 to 5

TestWave = -1 // So we can see the next command change it

HDF5LoadData /N=TestWave /O /SLAB=slab fileID, "TestArray"

Here we set the stride, count and block parameters to load every other element from both dimensions.

slab[][%Start] = 0 // Start at zero for all dimensions

slab[][%Stride] = 2 // Use a stride of 2 for all dimensions

slab[0][%Count] = 3 // Load three blocks of dimension 0

slab[1][%Count] = 2 // Load two blocks of dimension 1

slab[0][%Block] = 1 // Set block size for dimension 0 to 1

slab[1][%Block] = 1 // Set block size for dimension 1 to 1

TestWave = -1 // So we can see the next command change it

HDF5LoadData /N=TestWave /O /SLAB=slab fileID, "TestArray"

Finally we close the file.

HDF5CloseFile fileID

Each row of the slab wave holds a set of parameters (start, stride, count and block) for the corresponding dimension of the dataset in the file. Row 0 of the slab wave holds the parameters for dimension 0 of the dataset, and so on.

The start values must be greater than or equal to zero. All of the other values must be greater than or equal to 1. All values must be less than 2 billion.

HDF5LoadData clips the values supplied for the block sizes to the corresponding sizes of dataset being loaded.

HDF5LoadData requires that the slab wave have exactly four columns. The HDF5MakeHyperslabWave function, from automatically-loaded "HDF5 Utiliies.ipf" procedure file, creates a four-column wave with the column dimension labels Start, Stride, Count, and Block. HDF5LoadData does not require the column dimension labels. As of Igor Pro 9.00, HDF5MakeHyperslabWave returns a free wave if you pass "" for the path parameter. It also returns a wave reference as the function result whether the wave is free or not.

The slab wave must have at least as many rows as the dataset has dimensions. Extra rows are ignored.

HDF5LoadData creates a wave just big enough to hold all of the loaded data. So in the first example, it created a 6 x 5 wave whereas in the last example it created a 3 x 2 wave.

Igor Versus HDF5

This section documents differences in how Igor and HDF5 organize data and how Igor reconciles them.

Case Sensitivity

Igor is not case-sensitive but HDF5 is. So, for example, when you specify the name of an HDF5 data set, case matters. "/Dataset1" is not the same as "/dataset1".

If you load /Dataset1 and /dataset1 into Igor using the default wave name, the second load overwrites the wave created by the first.

HDF5 Object Names Versus Igor Object Names

The forward slash character is not allowed in HDF5 object names. If you create an Igor wave or data folder with a name containing a forward slash and attempt to save the object to an HDF5 file, you will get an error. An object name that starts with a dot may also create an error.

As of this writing, the section 4.2.3, "HDF5 Path Names" of the HDF5 User's Guide says:

Component link names may be any string of ASCII characters not containing a slash or a dot (/and ., which are reserved as noted above). However, users are advised to avoid the use of punctuation and non-printing characters, as they can create problems for other software.

HDF5 Data Types Versus Igor Data Types

HDF5 supports many data types that Igor does not support. When loading such data types, Igor attempts to convert to a data type that it supports, if possible. In the process, precision may be lost.

By default and for backward compatibility, Igor saves and loads HDF5 64-bit integer data as double-precision floating point. Precision may be lost in this conversion. To save and load 64-bit integer data as 64-bit integer, use /OPTS=1 with HDF5SaveData, HDF5SaveGroup, HDF5LoadData and HDF5LoadGroup. Because most operations are carried out in Igor in double-precision floating point, we recommend loading 64-bit integer as double if it fits in 53 bits and as 64-bit integer if it may exceed 53 bits.

Since Igor does not currently support 128-bit floating point data (long double), Igor loads HDF5 128-bit floating point data as double-precision floating point. Precision is lost in this conversion.

HDF5 Max Dimensions Versus Igor Max Dimensions

The HDF5 library supports data with up to 32 dimensions. Igor supports only four dimensions. If you load HDF5 data whose dimensionality is greater than four, the HDF5LoadData operation creates a 4D wave with enough chunks to hold all of the data. ("Chunk" is the name of the fourth dimension in Igor, after "row", "column" and "layer".)

For example, if you load a dataset whose dimensions are 7x6x5x4x3x2, HDF5LoadData creates a wave with dimensions 7x6x5x24. The wave has 24 chunks. The number 24 comes from multiplying the number of chunks in the HDF5 dataset (4 in this case) by the size of each higher dimension (3 and 2 in this case).

Row-major Versus Column-major Data Order

HDF5 and Igor store multi-dimensional data differently in memory. For almost all purposes, this difference is immaterial. For those rare cases in which it matters, here is an explanation.

HDF5 stores multi-dimensional data in "row-major" order. This means that the index associated with the highest dimension changes fastest as you move in memory from one element to the next. For example, if you have a two-dimensional dataset with 5 rows and 4 columns, as you move sequentially through memory, the column index changes fastest and the row index slowest.

Igor stores data in "column-major" order. This means that the index associated with the lowest dimension changes fastest as you move in memory from one element to the next. For example, if you have a two-dimensional dataset with 5 rows and 4 columns, as you move sequentially through memory, the row index changes fastest and the column index slowest.

To work around this difference, after the HDF5LoadData or HDF5LoadGroup operation initially loads data with two or more dimensions, it shuffles the data around in memory. The result is that the data when viewed in an Igor table looks just as it would if viewed in the HDF Group's HDFView program although its layout in memory is different. A similar accomodation occurs when you save Igor data using HDF5SaveData or HDF5SaveGroup.

HDF5 Images Versus Igor Images

The HDF5 Image and Palette Specification provides detailed guidelines for storing an image and its associated information (e.g., palette, color model) in an HDF5 file. However many HDF5 users do not follow the specification and just write image data to a regular 2D dataset. To distinguish between these two ways of storing an image, we use the term "formal image" to refer to an image written to the specification and "regular image" to refer to regular 2D datasets which the user thinks of as an image.

In an Igor image plot the wave's column data is plotted horizontally while in HDFView and most other programs the row data is plotted horizontally. Therefore, without special handling, a regular image would appear rotated in Igor relative to most programs.

The HDF5LoadImage and HDF5SaveImage operations handle loading and saving formal images. These operations automatically compensate for the difference in image orientation.

If you are dealing with a regular image, you will use the HDF5LoadData and HDF5SaveData operations, or HDF5LoadGroup and HDF5SaveGroup. These operations have a /TRAN flag which causes 2D data to be transposed. When you use /TRAN with HDF5LoadData, images viewed in Igor and in programs like HDFView will have the same orientation but will appear transposed when viewed in a table.

The /TRAN flag works with 2D and higher-dimensioned data. When used with higher-dimensioned data (3D or 4D), each layer of the data is treated as a separate image and is transposed. In other words, /TRAN treats higher-dimensioned data as a stack of images.

Saving and Reloading Igor Data

The HDF5SaveData and HDF5SaveGroup operations can save Igor waves, numeric variables and string variables in HDF5 files. All of these Igor objects are written as HDF5 datasets.

The datasets saved from Igor waves are, by default, marked with attributes that store wave properties such as the wave data type, the wave scaling and the wave note. The attributes have names like IGORWaveType and IGORWaveScaling. This allows HDF5LoadData and HDF5LoadGroup to fully recreate the Igor wave if it is later read from the HDF5 file back into Igor. You can suppress the creation of these attributes by using the /IGOR=0 flag when calling HDF5SaveData or HDF5SaveGroup.

Wave text is always written using UTF-8 text encoding. See HDF5 Wave Text Encoding for details.

Wave reference waves and data folder reference waves are read as such when you load an HDF5 packed experiment but HDF5LoadData and HDF5LoadGroup load these waves as double-precision numeric. The reason for this is that restoring such waves so that they point to the correct wave or data folder is is possible only when an entire experiment is loaded.

The datasets saved by HDF5SaveGroup from Igor variables are marked with an "IGORVariable" attribute. This allows HDF5LoadData and HDF5LoadGroup to recognize these datasets as representing Igor variables if you reload the file. In the absence of this attribute, these operations load all datasets as waves.

The value of the IGORVariable attribute is the data type code for the Igor variable. It is one of the following values:

| 0: | Igor string variable | |

| 4: | Igor real numeric variable | |

| 5: | Igor complex numeric variable | |

See also HDF5 String Variable Text Encoding.

Handling of Complex Waves

Igor Pro supports complex waves but HDF5 does not support complex datasets. Therefore, when saving a complex wave, HDF5SaveData writes the wave as if its number of rows were doubled. For example, HDF5SaveData writes the same data to the HDF5 file for these waves:

Make wave0 = {1,-1,2,-2,3,-3} // 6 scalar points

Make/C cwave0 = {cmplx(1,-1),cmplx(2,-2),cmplx(3,-3)} // 3 complex points

When reading an HDF5 file written by HDF5SaveData, you can determine if the original wave was complex by checking for the presence of the IGORComplexWave attributes that HDF5SaveData attaches to the dataset for a complex wave. HDF5LoadData and HDF5LoadGroup do this automatically if you use the appropriate /IGOR flag.

Handling of Igor Reference Waves

Igor Pro supports wave reference waves and data folder reference waves. Each element of a wave reference wave is a reference to another wave. Each element of a data folder reference wave is a reference to a data folder.

Igor correctly writes Igor reference waves when you save an experiment as HDF5 packed format, but the HDF5SaveData and HDF5SaveGroup do not support saving Igor reference waves.

The HDF5SaveData operation returns an error if you try to save a reference wave.

The behavior of the HDF5SaveGroup operation when it is asked to save a reference wave depends on the /CONT flag. By default (/CONT=1), it prints a note in the history saying it cannot save the wave and then continues saving the rest of the objects in the data folder. If /CONT=0 is used, HDF5SaveGroup returns an error if asked to save a reference wave.

HDF5 Multitasking

You can call HDF5 operations and functions from an Igor preemptive thread.

The HDF5 library is limited to accessing one HDF5 file at a time. This precludes loading multiple HDF5 files concurrently in a given Igor instance but it does allow you to load an HDF5 file in a preemptive thread while you do something else in Igor's main thread. If you create multiple threads that attempt to access HDF5 files, one of your threads will gain access to the HDF5 library. Your other HDF5-accessing threads will wait until the first thread finishes at which time another thread will gain access to the HDF5 library.

Igor HDF5 Capabilities

Igor supports only a subset of all of the HDF5 capability.

Here is a partial list of HDF5 features that Igor does support:

- Loading of all atomic datatypes.

- Loading of strings.

- Loading of array datatypes.

- Loading of variable-length datasets where the base type is integer or float.

- Loading of compound datasets (datasets consisting of C-like structures), including compound datasets containing members that are arrays.

- Use of hyperslabs to load subsets of datasets.

- Loading and saving object references.

- Loading dataset region references.

Igor HDF5 Limitations

Here is a partial list of HDF5 features that Igor does not support:

- Creating or appending to VLEN datasets (ragged arrays).

- Loading of deeply-nested datatypes. See HDF5 Nested Datatypes below.

- Saving dataset region references.

If Igor does not work with your HDF5 file, it could be due to a limitation in Igor. Send a sample file along with a description of what you are trying to do to support@wavemetrics.com and we will try to determine what the problem is.

Advanced HDF5 Data Types

This section is mostly of interest to advanced HDF5 users.

HDF5 Variable-Length Data

Most HDF5 files do not use variable-length datatypes so most users do not need to know this information.

Variable-length data consists of an array where each element is a 1D set of elements of another datatype, called the "base" datatype. The number of elements of the base datatype in each set can be different. For example, a 5 element variable-length dataset whose base type is H5T_STD_I32LE contains 5 1D sets of 32-bit, little-endian integers and the length of each set is independent.

HDF5LoadData loads variable-length datasets where the base type is integer or float only. The data for each element is loaded into a separate wave.

When loading most types of data, HDF5LoadData creates just one wave. When loading a variable-length dataset or attribute, one wave is created for each loaded element. If more than one element is to be loaded, the proposed name for the wave (the name of the dataset or attribute being loaded or a name specified by /N=name ) is treated as a base name. For example, if the dataset or attribute has three elements and name is test and the /O flag is used, waves named test0, test1 and test2 are created. If the /O flag is not used, names of the form test<n> are created where <n> is a number chosen to make the wave names unique.

HDF5LoadData operation supports loading a subset of a variable-length dataset. You do this by supplying a slab wave using the HDF5LoadData /SLAB flag. In the example from the previous paragraph, if you loaded just one element, its name would be test, not test0. If you loaded two elements, they would be named test0 and test1, regardless of which two elements you loaded.

This function demonstrates loading one element of a variable-length dataset. We assume that a symbolic path named Data and a file named "Vlen.h5" exist and that the file contains a 1D variable-length dataset named TestVlen that contains at least two elements. The function loads the second variable-length element into a wave named TestWave.

Function DemoVlenLoad()

Variable fileID

HDF5OpenFile /P=Data /R fileID as "Vlen.h5"

HDF5MakeHyperslabWave("root:slab", 1) // In HDF5 Utilities.ipf

Wave slab = root:slab

slab[0][%Start] = 1 // Start at second vlen element

slab[0][%Stride] = 1 // Use a stride of 1

slab[0][%Count] = 1 // Load 1 block

slab[0][%Block] = 1 // Set block size to 1

HDF5LoadData /N=TestWave /O /SLAB=slab fileID, "TestVlen"

HDF5CloseFile fileID

End

HDF5 Array Data

Most HDF5 files do not use array datatypes so most users do not need to know this information.

An HDF5 dataset (or attribute) consists of elements organized in one or more dimensions. Each element can be an atomic datatype, such as an unsigned short or a double-precision float, or it can be a composite datatype, such as a structure or an array. Thus, an HDF5 dataset can be an array of unsigned shorts, an array of doubles, an array of structures or an array of arrays. This section discusses loading this last type - an array of arrays.

In this case, the class of the dataset is H5T_ARRAY. The type of the dataset is something like "5 x 4 array of signed long" and "signed long" is said to be the "base type" of the array datatype. If the dataset itself is 1D with 10 rows then you would have a 10-row dataset, each element of which consists of one 5 x 4 array of signed longs.

In Igor this could be treated as a 3D wave consisting of 5 rows, 4 columns and 10 layers. However, since Igor supports only 4 dimensions while HDF5 supports 32, you could easily run out of dimensions in Igor. Therefore when you load an H5T_ARRAY-type dataset, HDF5LoadData creates a wave whose dimensionality is that of the array type, not that of the underlying dataset, except that the highest dimension is increased to make room for all of the data. This reduces the likelihood of running out of dimensions.

HDF5LoadData cannot load array data whose base type is compound or array (see HDF5 Nested Datatypes for details).

The following example illustrate how to load an array datatype. The example uses a sample file provided by NCSA and stored in Igor's HDF5 Samples directory.

Create an Igor symbolic path (Misc→New Symbolic Path) named HDF5Samples which points to the folder containing the SDS_array_type.h5 file (Igor Pro X Folder:Examples:Feature Demos:HDF5 Samples).

The sample file contains a 10 row 1D data set, each element of which is a 5 row by 4 column matrix of 32-bit signed big-endian integers (a.k.a., big-endian signed longs or I32BE).

When we load the dataset, we get 5 rows and 40 columns.

Variable fileID

HDF5OpenFile/P=HDF5Samples /R fileID as "SDS_array_type.h5"

HDF5LoadData fileID, "IntArray"

HDF5CloseFile fileID

Edit IntArray

The first four columns contain the 5x4 matrix from row zero of the dataset. The next four columns contain the 5x4 matrix from row one of the dataset. And so on.

In this case, Igor has enough dimensions so that you could, if you want, reorganize it as a 3D wave consisting of 10 layers of 5x4 matrices. You would do that using this command:

Redimension /N=(5,4,10) /E=1 IntArray

The /E=1 flag tells Redimension to change the dimensionality of the wave without actually changing the stored data. In Igor, the layout in memory of a 5x4x10 wave is the same as the layout of a 5x40 wave. The redimension merely changes the way we look at the wave from 40 columns of 5 rows to 10 layers of 4 columns of 5 rows.

Although you can load a dataset with an array datatype, Igor currently provides no way to write a datatype with an array datatype.

Loading HDF5 Reference Data

Most HDF5 files do not use reference datatypes so most users do not need to know this information.

An HDF5 dataset or attribute can contain references to other datasets, groups and named datatypes. There are two types of references: "object references" and "dataset region references". HDF5LoadData loads both types of references into text waves.

An element of an object reference dataset can refer to a dataset in the same or in another file. An element of a dataset region reference dataset can refer only to a dataset in the same file.

Loading HDF5 Object Reference Data

For each object reference to a dataset, HDF5LoadData returns "D:" plus the full path of the dataset within the HDF5 file, for example, "D:/GroupA/DatasetB".

For references to groups and named datatypes, HDFLoadData returns "G:" and "T:" respectively, followed by the path to the object.

Loading HDF5 Dataset Region Reference Data

This section is for advanced HDF5 users. A demo experiment, "HDF5 Dataset Region Demo.pxp", provides examples and utilities for dealing with dataset region references.

Prior to Igor Pro 9.00, HDF5LoadData returned the same thing when loading an object reference or a dataset region reference. It had this form:

<object type character>:<full path>

For a dataset, this might be something like

D:/Group1/Dataset3

In Igor Pro 9.00 and later, HDF5LoadData returns additional information for a dataset region reference. It has this form with the additional information shown in red:

<object type character>:<full path>.<region info>

For a dataset, this might be something like this with the additional information shown in red:

D:/Group1/Dataset3.REGIONTYPE=BLOCK;NUMDIMS=2;NUMELEMENTS=2;COORDS=0,0-0,2/0,11-0,13;

If you have code that depends on the pre-Igor 9 behavior, you can make it work with Igor 9 by using StringFromList with "." as list separator string to obtain the text preceding the dot character.

The region info string is a semicolon-separated keyword-value string constructed for ease of programmatic parsing. It consists of these parts:

REGIONTYPE=<type>

NUMDIMS=<number of dimensions>

NUMELEMENTS=<number of elements>

COORDS=<list of points> or <list of blocks>

<type> is POINT for a region defined as a set of points, BLOCK for a region defined as a set of blocks.

<number of dimensions> is the number of dimensions in the dataset.

<number of elements> is the number of points in a region defined as a set of points or the number of blocks in a region defined as a set of blocks.

<list of points> has the following format:

<point coordinates>/<point coordinates>/...

where <point coordinates> is a comma-separated list of coordinates.

For a 2D dataset with three selected points, this might look like this:

3,4/11,13/21,30

which specifies these three points:

row 3, column 4

row 11, column 13

row 21, column 30

<list of blocks> has the following format:

<coordinates>-<coordinates>/<coordinates>-<coordinates>/...

where <coordinates> is a comma-separated list of coordinates.

A dash appears between pairs of <coordinates>. The first set of coordinates of a pair specifies the starting coordinates of a block while the second set of coordinates of a pair specifies the ending coordinates of the block.

For a 2D dataset with three selected blocks, this might look like this:

3,4-6,7/11,13-15,17/21,30-37,38

which specifies these three blocks:

row 3, column 4 to row 6, column 7

row 11, column 13 to row 15, column 17

row 21, column 30 to row 37, column 38

Here is an example of a complete point dataset region info string with the additional information shown in red:

D:/Group1/Dataset3.REGIONTYPE=POINT;NUMDIMS=2;NUMELEMENTS=3;COORDS=3,4/11,13/21,30;

Here is an example of a complete block dataset region info string with the additional information shown in red:

D:/Group1/Dataset4.REGIONTYPE=BLOCK;NUMDIMS=2;NUMELEMENTS=3;COORDS=3,4-6,7/11,13-15/17/21,30-37/38;

The wave returned after calling HDF5LoadData on a two-row dataset region reference dataset would contain two rows of text like the examples just shown. Each row in the dataset region reference dataset refers to one dataset and to a set of points or blocks within that dataset.

The "HDF5 Dataset Region Demo.pxp" experiment provides further information including examples and utilities for dealing with dataset region references.

Saving HDF5 Object Reference Data

Most HDF5 files do not use reference datatypes so most users do not need to know this information.

An HDF5 dataset or attribute can contain references to other datasets, groups and named datatypes. These are called "object references". You can instruct HDF5SaveData to save a text wave as an object reference using the /REF flag. The /REF flag requires Igor Pro 8.03 or later.

The text to save as a reference must be formatted with a prefix character identifying the type of the referenced object followed by a full or partial path to the object: "G:", "D", or "T:" for groups, datatypes, and datasets respectively. For example:

Function DemoSaveReferences(pathName, fileName)

String pathName // Name of symbolic path

String fileName // Name of HDF5 file

Variable fileID

HDF5CreateFile/P=$pathName /O fileID as fileName

// Create a group to target using a reference

Variable groupID

HDF5CreateGroup fileID, "GroupA", groupID

// Create a dataset to target using a reference

Make/O/T textWave0 = {"One", "Two", "Three"}

HDF5SaveData /O /REF=(0) /IGOR=0 textWave0, groupID

// Write reference dataset to root using full paths

Make/O/T refWaveFull = {"G:/GroupA", "D:/GroupA/textWave0"}

HDF5SaveData /O /REF=(1) /IGOR=0 refWaveFull, fileID

HDF5CloseGroup groupID

HDF5CloseFile fileID

End

Partial paths are relative to the file ID or group ID passed to HDF5SaveData.

Saving HDF5 Dataset Region Reference Data

Igor Pro does not currently support saving dataset region references.

Loading HDF5 Enum Data

Most HDF5 files do not use enum datatypes so most users do not need to know this information.

Enum data values are stored in an HDF5 file as integers. The datatype associated with an enum dataset or attribute defines a mapping from an integer value to a name. HDF5LoadData can load either the integer data or the name for each element of enum data. You control this using the /ENUM=enumMode flag.

If /ENUM is omitted or enumMode is zero, HDF5LoadData creates a numeric wave with data type signed long and loads the integer enum values into it. This works for 8 bit, 16 bit and 32 bit integer enum data. Loading enum data based on 64-bit integers is not supported.

If enumMode is 1, HDF5LoadData creates a text wave and loads the name associated with each enum value into it. This is slower than loading the integer enum values but the speed penalty is significant only if you are loading a very large enum dataset or very many enum datasets.

Saving HDF5 Enum Data

Most HDF5 files do not use enum datatypes so most users do not need to know this information.

The ability to save Igor integer numeric data using an HDF5 enumeration data type was added in Igor Pro 9.01. You do this using the /ENUM flag of the HDF5SaveData operation. Neither the HDF5 Browser nor the HDF5SaveGroup support saving enum data.

Here is an example:

Function DemoSaveWaveAsEnum()

Variable fileID

HDF5CreateFile/P=IgorUserFiles /O fileID as "DemoSaveWaveAsEnum.h5"

Make/FREE/Y=(0x48) enumWave = {1,2,3} // 0x48 means unsigned byte data

String enumList = "One=1;Two=2;Three=3;" // Specifies the HDF5 enum datatype

HDF5SaveData /O /ENUM=enumList /IGOR=0 enumWave, fileID, "enumDataset"

HDF5CloseFile fileID

End